As you read this, neurons in your brain are creating your sensory experience of the world through coordinated patterns of electrical activity. All of your perceptions, including what you see and what you hear, arise through this physical process.

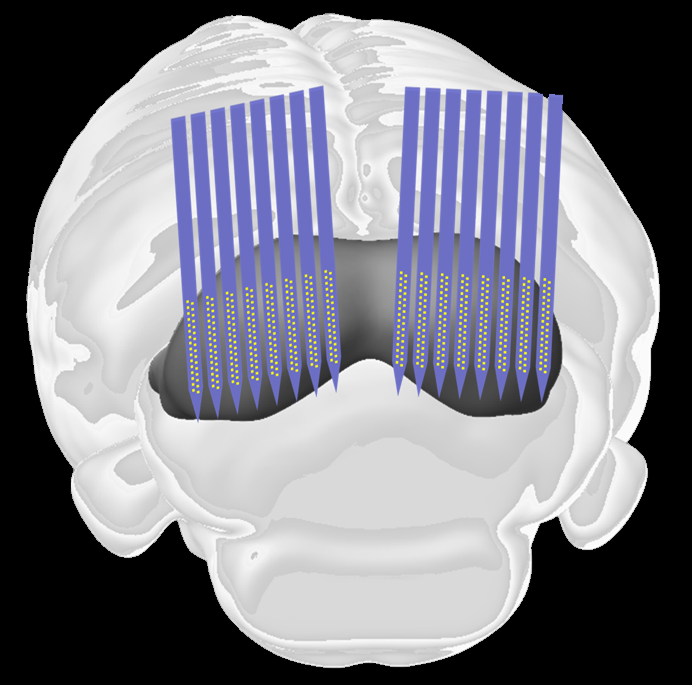

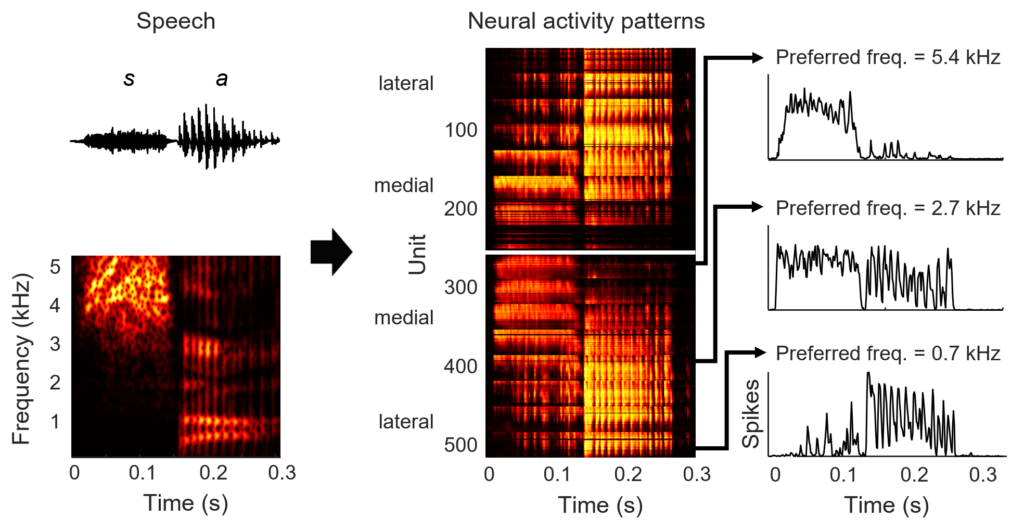

The brain forms an internal representation of the outside world, a neural code, that determines how sensory inputs are perceived. The activity patterns that govern perception are embedded in the brain’s neural networks at fine spatial and temporal scales (micrometers and milliseconds). To study the neural code at the required scale and resolution, we have developed animal models of human hearing along with measurement technology that allows us to monitor the electrical activity of thousands of individual neurons simultaneously. This gives us a unique resource for characterizing and manipulating the mapping from raw sounds to complex downstream neural activity patterns.

We are using this resource to study the function of neural networks in the auditory system and how they are disrupted by hearing loss. Hearing loss is not typically caused by a single pathology, but rather by a complex pattern of changes to the ear and brain that are driven by noise exposure and aging. Despite decades of research, the links between these physiological changes and the perceptual deficits that accompany hearing loss remain poorly understood. Current hearing aids often fail to help, and because hearing loss is such a complex condition, it is not yet clear how they can be improved.

We believe that our approach of combining large-scale neural recordings with computational modelling to link physiology to perception via the neural code will lead us to a better understanding of both normal hearing and hearing loss and provide new ideas for improving hearing aids and other treatments.

Project: Transforming hearing aids through large-scale electrophysiology and deep learning

EPSRC-funded collaboration with UCLH BRC and Perceptual Technologies Ltd.

As the impact of hearing loss continues to grow, the need for improved treatments is becoming increasingly urgent. In most cases, the only treatment available is a hearing aid. Unfortunately, many people with hearing aids don’t actually use them, partly because current devices, which are little more than simple amplifiers, often provide little benefit in social settings with high sound levels and background noise. The common complaint of those with hearing loss, “I can hear you, but I can’t understand you”, is echoed by hearing aid users and non-users alike.

The idea that hearing loss can be corrected by amplification alone is overly simplistic; while hearing loss does decrease sensitivity, it also causes a number of other problems that dramatically distort the information that the ear sends to the brain. To improve performance, the next generation of hearing aids must incorporate more complex sound transformations that correct these distortions. This is, unfortunately, much easier said than done. In fact, engineers have been attempting to hand-design hearing aids with this goal in mind for decades with little success.

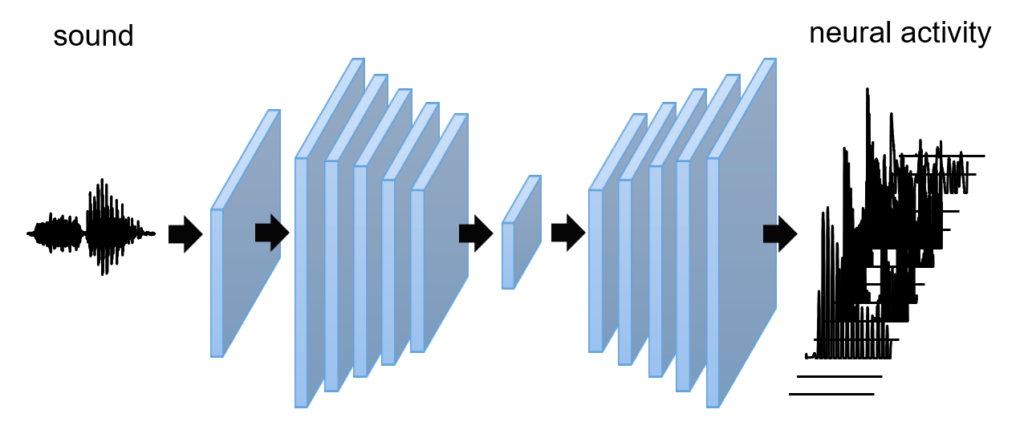

Fortunately, recent advances in experimental and computational technologies have created an opportunity for a fundamentally different approach. The key difficulty in improving hearing aids is that there are infinitely many ways to transform sounds, and we do not understand the fundamentals of hearing loss well enough to infer which transformations will be most effective. However, modern machine learning techniques will allow us to bypass this gap in our understanding. Given a large enough database of sounds and the neural activity that they elicit with normal hearing and with hearing impairment, deep learning can be used to identify the sound transformations that best correct distorted activity and restore perception as close to normal as possible.

Project: Characterizing the effects of hearing loss and hearing aids on the neural code for music

MRC-funded collaboration with Alinka Greasley (University of Leeds).

For many people, the ability to enjoy music is essential for their wellbeing. Unfortunately, this ability is increasingly at risk because of the growing burden of hearing loss. Current hearing aids, which are designed to improve the perception of speech, often distort music. But without a detailed understanding of the auditory processing of music and how it is disrupted by hearing loss, it remains unclear how the benefits of hearing aids for music can be improved.

We are studying the neural code for music with and without hearing loss and hearing aids to characterize the internal representation that underlies normal and impaired perception, and to provide specific design targets for improved hearing aids. To facilitate the linking of our results to the real-world experiences of human listeners, we are also conducting a large-scale survey of hearing aid users regarding their music perception. We expect the results of this project not only to yield new fundamental knowledge about neural coding but also to provide a foundation for the development of improved assistive listening devices and software that can significantly improve the perception and enjoyment of music for listeners with hearing loss.